Appearance

Performance Tuning

This section shows how batch size and concurrency affect performance and how to use these measurements to maximize throughput.

Effect of Batch Size on Performance

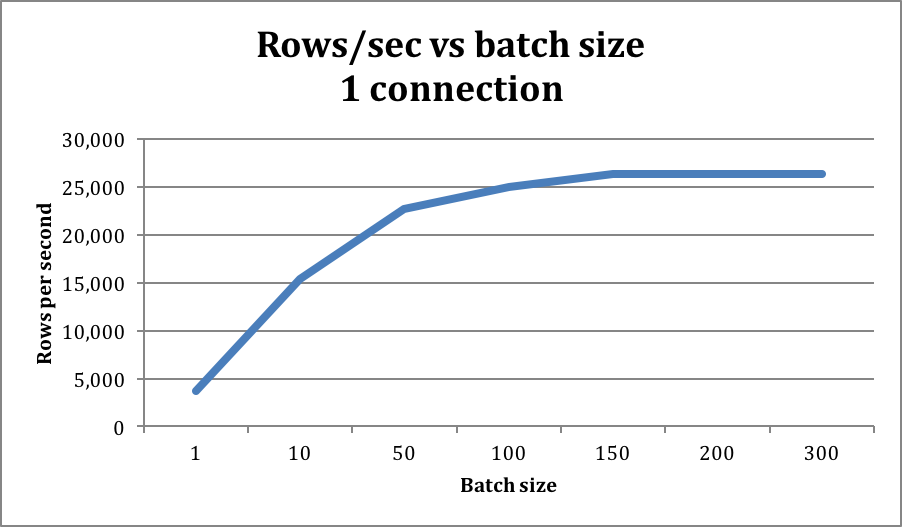

The following graph shows how batch size affects performance when data is inserted using a single connection:

With a single connection, a maximum throughput of ~25K rows per second requires a batch size of 100-150 INSERT statements per transaction. This value can vary depending on the row width for the current table. Therefore, Yellowbrick recommends testing different batch sizes per transaction to identify which batch size is optimal for a given table.

Effect of Concurrency on Performance

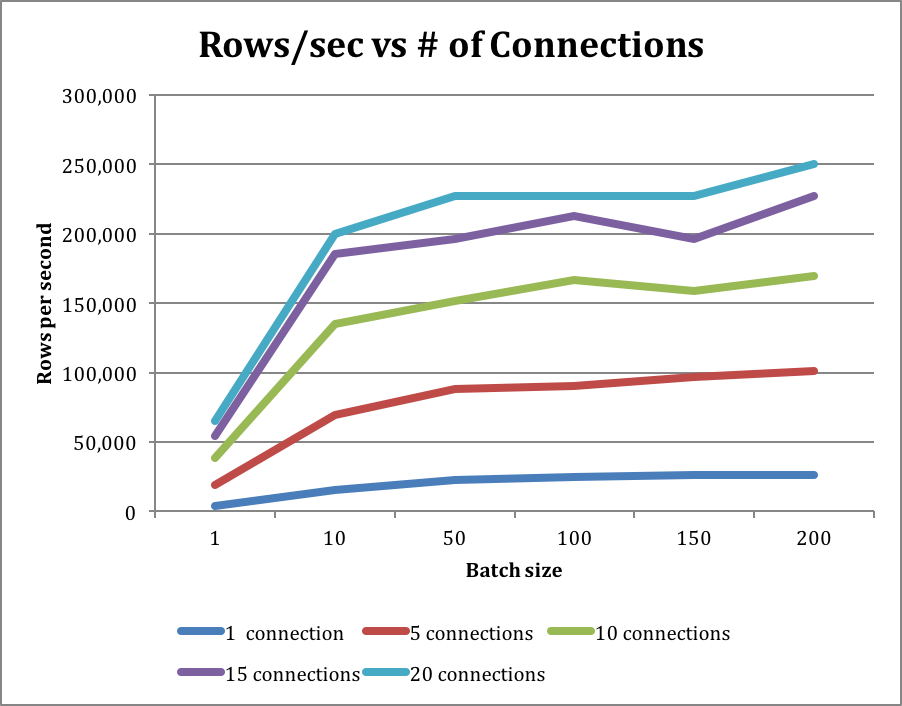

The following graph shows how performance scales as the number of connections and batch size increase:

You can achieve a maximum rate of ~200K rows per second with 15 concurrent connections and a batch size of 50 INSERT operations per transaction. You can also achieve this rate with 20 concurrent connections and a batch size of 10 INSERT operations per transaction. If fewer than 15 connections or batch sizes less than 10 are used, throughput begins to significantly decrease.

Prepared Statements and Command Batching

When you are running multiple INSERT operations, always use prepared statements for better performance, as well as command batching, as shown in the following code example.

Choice of JDBC Driver

For query workloads, the pgjdbc-ng JDBC driver is recommended because it offers significantly higher performance and lower latency over large queries: up to 10x higher throughput.

However, the pgjdbc-ng driver does not support batching of multiple statements efficiently. For inserting data, the regular PostgreSQL JDBC driver performs better and is recommended.

Parent topic:Trickle Loading Data via JDBC