
Throttling Concurrency

You can use WLM rules to set an action that limits query concurrency when a condition is met. This kind of rule provides another layer of concurrency control for query workloads, in addition to the minimum and maximum concurrency settings that you define per resource pool. Throttling ensures that lower-priority workloads are queued so that higher-priority workloads are protected.

To set up one of these rules, use the Submit, Assemble, or Compile rule type and the Limit Concurrent Queries action. The result is a wlm.throttle rule. A "throttle" rule is primarily used to reduce rather than increase traffic to a pool. This type of rule requires one or two attributes:

- A `count` value, which limits concurrency to some number of queries (given some condition)
- A `throttleName` attribute that defines a concurrency limit per user, role, or application (for example). In theory, this name can refer to any classification of queries, but it is mainly intended to provide per-user or per-role controls over concurrency.

You can use the count attribute by itself in a rule, or you can use both attributes.
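The following sketch shows how the two attributes might interact, using a small JavaScript model. It rests on stated assumptions and is not Yellowbrick's implementation. The `ThrottleTracker` class and its `tryStart`/`finish` methods are hypothetical names. The model also assumes that each distinct throttle name has its own counter and that an omitted name falls back to one shared counter.

```javascript
// Hypothetical model of how a count and an optional throttle name could
// interact. Not Yellowbrick code: ThrottleTracker, tryStart, and finish are
// illustrative names.
// Assumption: each distinct throttle name has its own running-query counter,
// and an omitted name falls back to a single shared counter.
const SHARED = Symbol('shared');

class ThrottleTracker {
  constructor() {
    this.running = new Map(); // throttle key -> number of running queries
  }

  static key(name) {
    return name === undefined ? SHARED : String(name);
  }

  // Returns true if the query may start now, false if it must wait in the queue.
  tryStart(count, name) {
    const k = ThrottleTracker.key(name);
    const current = this.running.get(k) || 0;
    if (current >= count) return false;
    this.running.set(k, current + 1);
    return true;
  }

  // Releases one slot when a query that started under this key completes.
  finish(name) {
    const k = ThrottleTracker.key(name);
    const current = this.running.get(k) || 0;
    if (current > 0) this.running.set(k, current - 1);
  }
}

const t = new ThrottleTracker();
console.log(t.tryStart(1, 'alice')); // true: alice's first query starts
console.log(t.tryStart(1, 'alice')); // false: alice already has one running
console.log(t.tryStart(1, 'bob'));   // true: bob has his own counter
t.finish('alice');
console.log(t.tryStart(1, 'alice')); // true: alice's slot was released
```

Keying the counter by name is what lets a single rule act as many independent limits, one per user, role, or application.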

Note: A global system-defined rule (global_throttleConcurrentQueries) sets a maximum query concurrency limit of 500. This rule can be changed, but Yellowbrick recommends that you create your own rule if you want to change this limit. You can use a larger value for the rule order so that a user-defined rule overrides the global rule. Alternatively, you can disable the global rule.

As usual, you need to activate the profile after making rule changes or adding new rules, but you may also need to suspend and resume the instance for this kind of change to take effect. If you see the following error during WLM rule processing, either suspend and resume the instance or drop and re-create the rule:

```
Rule error for throttle: Cannot throttle with different count values...
```

Examples

For example, write a rule that limits user yb100 to 1 query, effectively turning off concurrency for that user:

```javascript
if (w.user === 'yb100') { wlm.throttle(1, w.user); }
```

If this user submits two queries in succession, the second query does not start executing until the first one finishes. Now rewrite this rule to use a count of 3:

```javascript
if (w.user === 'yb100') { wlm.throttle(3, w.user); }
```

User yb100 can now start three queries in succession, and they can all run concurrently (assuming that slots are available).
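You can check the difference between the two yb100 rules offline by running their bodies against stand-in objects. In this sketch, `w` and `wlm` are hypothetical mocks rather than the objects Yellowbrick passes to a rule, and `simulate` is an illustrative helper. It submits several queries back to back, lets none of them finish, and assumes the pool always has a free slot, so only the throttle can hold a query back.

```javascript
// Offline sketch: `w` and `wlm` below are hypothetical mocks, not the objects
// Yellowbrick passes to a rule. The rule bodies are the yb100 examples above.
const ruleCount1 = (w, wlm) => { if (w.user === 'yb100') { wlm.throttle(1, w.user); } };
const ruleCount3 = (w, wlm) => { if (w.user === 'yb100') { wlm.throttle(3, w.user); } };

// Submits `n` queries for `user` back to back, with none finishing in between,
// and counts how many start immediately versus wait in the queue.
// Assumption: the resource pool always has a free slot, so only the throttle
// can hold a query back.
function simulate(rule, user, n) {
  const running = new Map(); // throttle name -> running queries
  let started = 0;
  let queued = 0;
  for (let i = 0; i < n; i++) {
    let limit = null;
    rule({ user }, { throttle(count, name) { limit = { count, name }; } });
    if (limit === null) { started++; continue; } // rule did not apply
    const current = running.get(limit.name) || 0;
    if (current < limit.count) {
      running.set(limit.name, current + 1);
      started++;
    } else {
      queued++;
    }
  }
  return { started, queued };
}

console.log(simulate(ruleCount1, 'yb100', 3)); // { started: 1, queued: 2 }
console.log(simulate(ruleCount3, 'yb100', 3)); // { started: 3, queued: 0 }
console.log(simulate(ruleCount1, 'someone_else', 3)); // { started: 3, queued: 0 }
```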

If you do not put a condition on the user, concurrency is limited for each user on the system. The following example disables concurrency for all users who run INSERT queries. Each user may run one INSERT at a time.

```javascript
if (w.type === 'insert') {
  wlm.throttle(1, w.user);
}
```

The following example applies concurrency control at the application level. The ybload application is limited to one load at a time. Any user with load privileges can run a single load, but two concurrent loads are prohibited:

```javascript
if (w.application === 'ybload') { wlm.throttle(1); }
```

The effect of this rule is similar to always assigning ybload operations to a resource pool with only one slot (minimum and maximum concurrency values of 1/1).

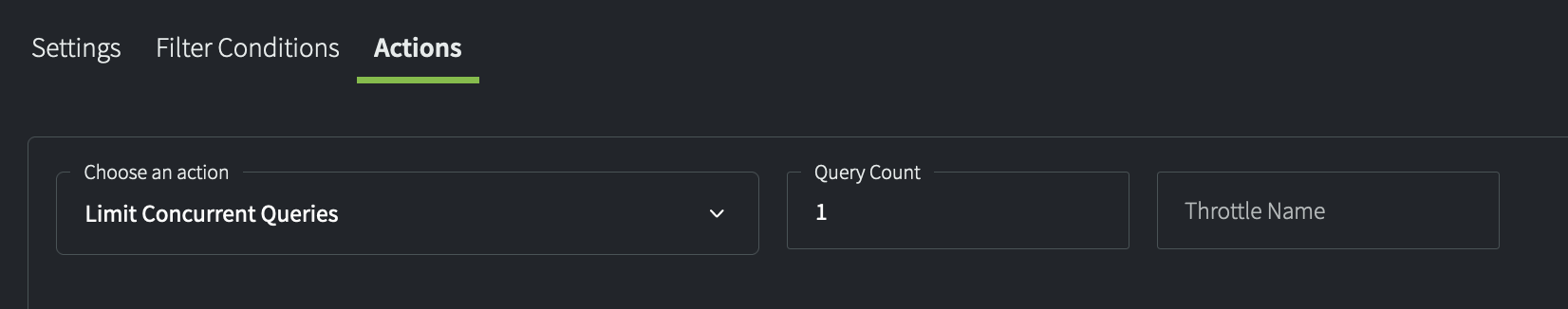

The screenshot above shows this rule as created in Yellowbrick Manager. Note that the action chosen is Limit Concurrent Queries and that no throttle name is required (just the count value of 1).
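The INSERT and ybload rules differ only in whether a throttle name is passed. A small offline comparison makes the effect visible. As before, `w` and `wlm` are hypothetical mocks and `admit` is an illustrative helper. The sketch assumes that an unnamed throttle shares one counter across all users, which is the behavior the ybload example describes.

```javascript
// Offline sketch comparing a named (per-user) throttle with an unnamed one.
// `w` and `wlm` are hypothetical mocks; an omitted throttle name is assumed
// to share one counter across all users.
const insertRule = (w, wlm) => { if (w.type === 'insert') { wlm.throttle(1, w.user); } };
const ybloadRule = (w, wlm) => { if (w.application === 'ybload') { wlm.throttle(1); } };

// Feeds queries through `rule` in order, with none finishing in between,
// and returns the users whose queries start immediately.
function admit(rule, queries) {
  const running = new Map(); // throttle name (or undefined) -> running queries
  const started = [];
  for (const w of queries) {
    let limit = null;
    rule(w, { throttle(count, name) { limit = { count, name }; } });
    if (limit === null) { started.push(w.user); continue; } // rule did not apply
    const current = running.get(limit.name) || 0;
    if (current < limit.count) {
      running.set(limit.name, current + 1);
      started.push(w.user);
    }
  }
  return started;
}

// Per-user throttle: ann's second INSERT waits, but bob's INSERT starts.
console.log(admit(insertRule, [
  { user: 'ann', type: 'insert' },
  { user: 'ann', type: 'insert' },
  { user: 'bob', type: 'insert' },
])); // [ 'ann', 'bob' ]

// Unnamed throttle: only one load runs at a time, whoever submits it.
console.log(admit(ybloadRule, [
  { user: 'ann', application: 'ybload' },
  { user: 'bob', application: 'ybload' },
])); // [ 'ann' ]
```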

In the following example, assume that the users are developers who belong to a role called dev. The WLM rule in this case disables concurrency for individual users in that role but enables it at the role level:

```javascript
if (String(w.roles).match(/dev/)) { wlm.throttle(1, w.user); }
```

In other words, if five developers belong to this role, maximum concurrency for the pool when this rule is applied is 5.
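The ceiling of 5 follows from simple arithmetic: each matched user gets a limit of 1, so the total is the number of matched users times 1. The sketch below checks this with hypothetical mocks. It assumes that `w.roles` is an array of role names, which the rule's `String()` call would join with commas. It also shows a side effect of the plain `/dev/` pattern under that assumption, which is that any role name containing "dev" matches. `throttledCeiling` and `exactDevRule` are illustrative names, not part of the product.

```javascript
// Offline sketch of the role-based rule above. `w` and `wlm` are hypothetical
// mocks. Assumption: w.roles is an array of role names, which String() joins
// with commas (for example ['analyst', 'dev'] becomes 'analyst,dev').
const devRule = (w, wlm) => { if (String(w.roles).match(/dev/)) { wlm.throttle(1, w.user); } };

// Each throttled user can hold at most `count` running queries, so the ceiling
// for everyone the rule matches is the sum of the per-name counts.
function throttledCeiling(rule, users) {
  const countByName = new Map();
  for (const w of users) {
    rule(w, { throttle(count, name) { countByName.set(name, count); } });
  }
  let total = 0;
  for (const count of countByName.values()) total += count;
  return total;
}

const developers = ['d1', 'd2', 'd3', 'd4', 'd5'].map((user) => ({ user, roles: ['dev'] }));
console.log(throttledCeiling(devRule, developers)); // 5

// Caveat: /dev/ matches any role name that contains "dev", such as "devops".
const withOps = developers.concat([{ user: 'o1', roles: ['devops'] }]);
console.log(throttledCeiling(devRule, withOps)); // 6

// Under the same array assumption, an anchored pattern matches only "dev".
const exactDevRule = (w, wlm) => {
  if (/(^|,)dev(,|$)/.test(String(w.roles))) { wlm.throttle(1, w.user); }
};
console.log(throttledCeiling(exactDevRule, withOps)); // 5
```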
